A Phone That Can See Like We Do

Smartphones are getting smarter, Superkids. They already know the time, their location on the globe, their orientation and movement, and the ambient light and air pressure. Soon they will even know their surroundings. They’re getting closer to human senses.

The latest concept for accurate location sensing comes from Google. The Project Tango concept for smartphones can connect users to information or people far away, and it is designed to give the phone a better understanding of the immediate area around its user.

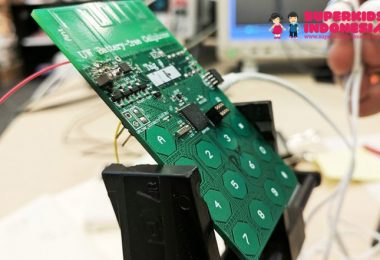

The prototype is a 5-inch smartphone containing customized hardware and software designed to track the full 3D motion of the device while creating a map of the environment. It merges the physical and digital worlds, much as humans use visual cues to interact with their environment.

Project Tango isn’t Android, but it runs on it. The prototype offers development APIs that let Android apps built with Java, C/C++, or the Unity Game Engine obtain position, orientation, and depth data from the phone.

So far, it’s sort of blocky and bulky. It’s got a 4-megapixel camera, an additional motion-tracking camera, and a third depth-sensing camera. Together with a pair of processors optimized for computer vision calculations, the phone’s sensors take more than 250,000 3D measurements a second, updating its position and orientation in real time, all while making a virtual 3D map of whatever it’s pointing at. It looks like someone crammed an Xbox Kinect sensor into a smartphone.

For now, it’s still a prototype and a very experimental endeavor. Interested developers can apply for a Project Tango development kit.

TEGUH WAHYU UTOMO

PHOTOS: GOOGLE

Indonesia
